The Operators and Monoids of CHP

When we create binary operators, in mathematics or in programming, they often have certain common identifiable properties:

- If you can re-order the arguments, e.g. 1 + 2 is the same as 2 + 1, we say that it is commutative — in contrast, division is not commutative.

- If you have two applications of the operator and the order of evaluation/bracketing doesn’t matter, e.g. (1 + 2) + 3 is the same as 1 + (2 + 3), we say that it is associative — in contrast, subtraction is not associative.

- If one particular operand always leaves the other side unchanged, we can say that this is the unit of an operator, e.g. 1 * x is the same as x, so 1 is the unit of multiplication.

- If one particular operand always ignores/overrides the other, we can say that this is the zero of an operator, e.g. 0 * x is the same as 0, so 0 is the zero of multiplication.

- If an operator has a unit or zero that only works on one side of the operator, we name it accordingly. For example, we say that division has a right-unit of 1 (because x / 1 is the same as x), but it does not have a left-unit; there is no value k such that for all x, k / x is the same as x.
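As a rough illustration of the properties above, here is a small sketch that tests each law on a handful of sample values. The helper names (`commutative`, `associative`, `leftUnit` and so on) are my own and not from any library, and passing on a finite sample is only evidence that a law holds, not a proof.

```haskell
-- Each check tries the law on every combination of the sample values.
commutative :: Eq a => (a -> a -> a) -> [a] -> Bool
commutative op xs = and [x `op` y == y `op` x | x <- xs, y <- xs]

associative :: Eq a => (a -> a -> a) -> [a] -> Bool
associative op xs =
  and [(x `op` y) `op` z == x `op` (y `op` z) | x <- xs, y <- xs, z <- xs]

leftUnit, rightUnit, leftZero, rightZero :: Eq a => (a -> a -> a) -> a -> [a] -> Bool
leftUnit  op u xs = and [u `op` x == x | x <- xs]
rightUnit op u xs = and [x `op` u == x | x <- xs]
leftZero  op z xs = and [z `op` x == z | x <- xs]
rightZero op z xs = and [x `op` z == z | x <- xs]

-- Non-zero rationals, so that division is always defined.
samples :: [Rational]
samples = [1, 2, 3, 1/2, -4]

main :: IO ()
main = do
  print (commutative (+) samples)                         -- True
  print (commutative (/) samples)                         -- False
  print (associative (-) samples)                         -- False
  print (leftUnit (*) 1 samples, leftZero (*) 0 samples)  -- (True,True)
  print (rightUnit (/) 1 samples)                         -- True
  -- No sample value works as a left-unit of division.
  print (any (\k -> leftUnit (/) k samples) samples)      -- False
```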

We can find these properties all over maths and programming. Set union is commutative, associative, and has a unit of the empty set, but no zero. The boolean AND operator is commutative, associative, has the unit “true” and the zero “false”. STM’s orElse combinator is associative, with the unit retry, and the left-zero of a return statement. Any operator that is associative and has a unit forms a monoid, which can be put into Haskell as an instance of the Monoid type-class (more on that below).

The operators in CHP also have some of the aforementioned properties. A full list is buried at the back of the tutorial, but I should probably pull them into the API documentation. (Note that the laws I discuss here are concerned with the behavioural semantics of the operators; the types of the expressions may differ trivially.) The parallel operator <||> is commutative and associative, with a unit of skip, the process that does nothing and returns successfully. The unprioritised choice operator <-> is commutative and associative, with a unit of stop, the process that is never ready in a choice. The implication of choice and parallelism being associative and commutative is that the order of the items in a call to alt or runParallel doesn’t make any difference to the behaviour. The operators for wiring up a pipeline in the Utils module are associative but lack the other properties.

Poison Handler Properties

We can view the poison handlers `onPoisonTrap` and `onPoisonRethrow` as binary operators. To recap: `onPoisonTrap` runs the left-hand side, but if a poison exception occurs then the right-hand side is run. `onPoisonRethrow` does the same, but after the right-hand side has finished, the poison exception is rethrown. They are not commutative — in exception terminology, the first argument is the try and the second the catch; they cannot be swapped freely!

To my surprise, `onPoisonTrap` is associative. Abbreviating it to `oPT`, consider p `oPT` q `oPT` r. If you bracket the first two items, (p `oPT` q) `oPT` r, q will only execute if p throws poison, and r will only execute if q then throws poison (because p’s poison is trapped, so the only poison that can escape the first bracket is from q). If you bracket the latter two, p `oPT` (q `oPT` r), the brackets will only execute if p throws poison, which will pass control to q, which will only pass control to r if poison is thrown by q. So the semantics are associative.

In contrast, `onPoisonRethrow` is not associative. Abbreviating it to `oPR`, consider: p `oPR` skip `oPR` r. If bracketed (p `oPR` skip) `oPR` r, r will be executed if p poisons, but if bracketed p `oPR` (skip `oPR` r), r will never be executed (because skip won’t throw poison).

`onPoisonTrap` has a left-unit of throwPoison (because throwing poison automatically transfers control to the other side, the handler), and a right-unit of throwPoison (because trapping poison then throwing poison has a null effect on the original code). `onPoisonRethrow` has no left-unit but has two right-units: throwPoison and the return statement. Any code that cannot throw poison (e.g. a return statement) is a left-zero of both `onPoisonTrap` and `onPoisonRethrow` because it will never trigger the handler. Neither operator has a right-zero; there is no handler that can cause the original code to always be ignored.
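The claims in this section can be checked against a toy model. This is not CHP: a process is reduced to the list of steps it ran plus whether it ended in poison. The names `Proc`, `oPT`, `oPR`, `skip'` and `throwPoison'` are hypothetical stand-ins for the real operators. Under those assumptions the model agrees with the reasoning above.

```haskell
-- A toy model, not CHP itself: a process is the trace of steps it runs,
-- plus whether it finished normally or ended by throwing poison.
data Outcome = Finished | Poisoned deriving (Eq, Show)
type Proc = ([String], Outcome)

-- Hypothetical stand-ins for CHP's skip and throwPoison.
skip', throwPoison' :: Proc
skip'        = ([], Finished)
throwPoison' = ([], Poisoned)

-- A named step that runs and then either completes or throws poison.
step :: String -> Outcome -> Proc
step name out = ([name], out)

-- Model of onPoisonTrap: the handler runs only on poison, and its outcome stands.
oPT :: Proc -> Proc -> Proc
oPT (t, Finished) _       = (t, Finished)
oPT (t, Poisoned) (t', o) = (t ++ t', o)

-- Model of onPoisonRethrow: as above, but poison is rethrown after the handler.
oPR :: Proc -> Proc -> Proc
oPR (t, Finished) _       = (t, Finished)
oPR (t, Poisoned) (t', _) = (t ++ t', Poisoned)

procs :: [Proc]
procs = skip' : throwPoison' : [step n o | n <- ["p", "q"], o <- [Finished, Poisoned]]

-- Exhaustive associativity check over the small set of sample processes.
assoc :: (Proc -> Proc -> Proc) -> Bool
assoc op = and [(a `op` b) `op` c == a `op` (b `op` c) | a <- procs, b <- procs, c <- procs]

main :: IO ()
main = do
  print (assoc oPT)  -- True
  print (assoc oPR)  -- False
  -- throwPoison' is both a left-unit and a right-unit of oPT.
  print (all (\p -> throwPoison' `oPT` p == p && p `oPT` throwPoison' == p) procs)  -- True
  -- throwPoison' and skip' are both right-units of oPR.
  print (all (\p -> p `oPR` throwPoison' == p && p `oPR` skip' == p) procs)         -- True
```

The counterexample for `oPR` found by the exhaustive check is the one from the text: a poisoning process, then skip, then any process with a visible step.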

Monoids

The fact that some of the operators mentioned here are associative and have units means that they could form a monoid. In fact, CHP blocks of code could form several monoids. In Haskell, there is the problem that the monoid instance must be uniquely identified by its type, even though it is really its operator that is distinctive. All the standard number types can form a Monoid under addition (unit: 0, operator: +) or multiplication (unit: 1, operator: *). Defining a Monoid instance for, say, Int would thus be ambiguous: when you say 4 `mappend` 3, would you expect 7 or 12? To solve this, the Data.Monoid module defines newtype-wrappers around types to identify the monoid. Sum Int is a monoid under addition, whereas Product Int is a monoid under multiplication.
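To make the ambiguity concrete, this short sketch uses the `Sum` and `Product` wrappers from Data.Monoid to pick each monoid explicitly. The `Int` annotations are only there to avoid relying on type defaulting.

```haskell
import Data.Monoid (Product(..), Sum(..))

main :: IO ()
main = do
  -- The wrapper, not the underlying Int, selects the operator and unit.
  print (getSum     (Sum 4 `mappend` Sum 3 :: Sum Int))              -- 7
  print (getProduct (Product 4 `mappend` Product 3 :: Product Int))  -- 12
  -- mconcat folds a whole list with the chosen monoid.
  print (getSum     (mconcat (map Sum [1 .. 10]) :: Sum Int))        -- 55
  -- mempty is the unit of the chosen monoid.
  print (getProduct (mempty :: Product Int))                         -- 1
```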

I could use the same trick for CHP; I could define several monoid instances. Here is a monoid that allows blocks of code (with no useful return) to be joined in parallel:

    newtype Par = Par {runPar :: CHP ()}

    instance Monoid Par where
      mempty = Par skip
      mappend p q = Par (runPar p <|*|> runPar q)
      mconcat = Par . runParallel_ . map runPar

This could be made a little more useful by making a parallel monoid out of blocks of code that return a type that is itself a monoid; when the parallel blocks of code have all finished, their results are combined using the monoid instance:

    newtype ParMonoid a = ParMonoid {runParMonoid :: CHP a}

    instance Monoid a => Monoid (ParMonoid a) where
      mempty = ParMonoid (return mempty)
      mappend p q = ParMonoid
        (liftM (uncurry mappend) $ runParMonoid p <||> runParMonoid q)
      mconcat = ParMonoid . liftM mconcat . runParallel . map runParMonoid

There is also a straightforward monoid instance for choice between blocks:

    newtype Alt a = Alt {runAlt :: CHP a}

    instance Monoid (Alt a) where
      mempty = Alt stop
      mappend a b = Alt (runAlt a <-> runAlt b)
      mconcat = Alt . alt . map runAlt

Finally, there is a monoid built around `onPoisonTrap`:

    newtype PoisonTrap a = PoisonTrap {runPoisonTrap :: CHP a}

    instance Monoid (PoisonTrap a) where
      mempty = PoisonTrap throwPoison
      mappend a b = PoisonTrap (runPoisonTrap a `onPoisonTrap` runPoisonTrap b)

Consider the meaning of mconcat (map PoisonTrap [p,q,r,s]). It says: run p; if no poison is thrown, we are done. If poison is thrown, run q. If q throws poison, run r, and if that throws poison, run s. Obviously this is quite excessive, but I had never thought of constructing such a function until I realised that `onPoisonTrap` was associative and thus could form a monoid.
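The fall-through behaviour of that mconcat can be imitated without CHP. This sketch uses plain IO exceptions: `Poison` and `Trap` are hypothetical stand-ins for CHP's poison exception and the PoisonTrap wrapper, and the IORef only records which blocks actually ran. GHC 8.4 and later require a Semigroup instance alongside Monoid, so the operator is defined there.

```haskell
import Control.Exception (Exception, catch, throwIO)
import Data.IORef (modifyIORef, newIORef, readIORef)

-- Hypothetical stand-in for CHP's poison exception.
data Poison = Poison deriving Show
instance Exception Poison

newtype Trap a = Trap { runTrap :: IO a }

-- Run the left block; if it throws Poison, run the right block instead.
instance Semigroup (Trap a) where
  Trap p <> Trap q = Trap (p `catch` \Poison -> q)

-- The unit immediately throws poison, passing control to the other side.
instance Monoid (Trap a) where
  mempty = Trap (throwIO Poison)

main :: IO ()
main = do
  ran <- newIORef []
  -- A block that logs its name, then succeeds or throws poison.
  let attempt name ok = Trap $ do
        modifyIORef ran (++ [name])
        if ok then return name else throwIO Poison
  winner <- runTrap (mconcat [ attempt "p" False, attempt "q" False
                             , attempt "r" True,  attempt "s" True ])
  order <- readIORef ran
  print (winner, order)  -- ("r",["p","q","r"])
```

Here p and q both throw poison, r succeeds, and s is never run.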

I can’t recall seeing monoid instances like these (involving monadic actions), so perhaps these sorts of monoid instances on monads don’t end up being very useful (if you know of a particular use, please add a comment below). I find it interesting to see how CHP code can form several different monoids just as an exercise.

Poison: Concurrent Termination

All programs must come to an end. At some point, a program will need to terminate, either because it has finished its task, or because the user has told it to terminate — or perhaps because the program has encountered an unrecoverable error. Especially in the latter two cases (where the termination is unanticipated), the program may have files or sockets open and so we need to terminate it gracefully, tidying up all such externalities.

In Haskell, we differentiate between pure computations, and side-effecting computations. Pure computations, used by many parallel mechanisms, can be terminated at any time without needing to tidy up — or rather, all that needs tidying up is immediately apparent to the run-time. Side-effecting computations, which are used with concurrency mechanisms such as MVars, STM and our own CHP, need extra code to deal with termination.

Sequential imperative programs often deal with this via exception handlers — the termination becomes an exception that unwinds the call stack, tidying up all current work (call-stack entries) until it reaches the top of the program. Haskell’s error monads can provide similar functionality.

Concurrency introduces two issues that can make termination much more difficult. Firstly, there is the issue of notifying all concurrent threads about the termination. The termination usually originates in one concurrent thread — the thread that handled the user request, the thread that encountered the error, or the thread that was collecting the results of the work being done. This thread needs to tell all the other concurrent threads in the system that it is time to terminate — bearing in mind that the other threads might be busy doing something else (e.g. computation, reading from a file, waiting to communicate with other threads, and so on). Secondly, there is the problem that the program no longer consists of a single call stack that can be unwound. It instead consists of lots of different call stacks.

Haskell now has asynchronous exceptions, which can be used for terminating a concurrent system. Asynchronous exceptions are quite a neat idea, but they do require thought over the use of block and unblock to get the right semantics, and it can be a little overwhelming to reason about when exceptions might occur (i.e. at any time!). Asynchronous exceptions also introduce a book-keeping problem: which thread is responsible for throwing to which thread? You could keep a registry of threads and make any thread wishing to terminate throw to all of them (a machine-gun approach to terminating the system), but then you may end up with race hazards if new threads are created by a still-running thread while you are attempting to kill off all the threads. Furthermore, some threads may only be able to tidy up once their child threads have tidied up. For example, a thread may have initialised an external library and spawned children to work with the library, but may need to call a finalising function in the external library once all the children have tidied up, and not before.
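For comparison, here is a minimal sketch of terminating a single worker with an asynchronous exception, using only the base library. The worker and its "tidy up" action are invented for illustration. Note that in current versions of base, `mask` plays the role that block and unblock play in the discussion above; this example does not need either because `finally` handles the masking itself.

```haskell
import Control.Concurrent (forkIO, killThread, threadDelay)
import Control.Concurrent.MVar (newEmptyMVar, putMVar, takeMVar)
import Control.Exception (finally)

main :: IO ()
main = do
  started <- newEmptyMVar
  tidied  <- newEmptyMVar
  worker <- forkIO $
    (putMVar started () >> threadDelay 60000000)   -- pretend to work for a minute
      `finally` putMVar tidied "worker tidied up"   -- runs even when the thread is killed
  takeMVar started    -- wait until the worker is inside the protected action
  killThread worker   -- throws an asynchronous exception to the worker
  takeMVar tidied >>= putStrLn
```

Even in this tiny case the killer has to know the worker's thread ID and has to wait explicitly for the tidy-up, which is the book-keeping problem described above.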

CHP introduces poison as a way to terminate concurrent systems. The idea is that the communication/synchronisation network in your program already provides a way to link your program together. Using the channels to send termination messages is an approach fraught with race hazards (see the classic paper on the matter, although poison improves on the solution offered there), and poison is not about sending messages. In CHP you can poison a channel, barrier or clock (I’ll talk about channels here, but it applies equally to barriers and clocks). This sets the channel into a poisoned state. Forever after, any attempt to use that channel will cause a poison exception to be thrown in the thread that made the attempt. If a process is waiting on a channel when the other end is poisoned, the waiting process is woken and the exception is thrown. Thus poison is really a system of synchronous exceptions that can occur only when you attempt to communicate on a channel. This notifies your immediate neighbours (i.e. those with whom you share a channel) that they should tidy up and terminate. The key is that on discovering the poison, a process should poison all its channels (repeated poisonings of the same channel are benign, and will not throw a poison exception), thus spreading the poison around the network.

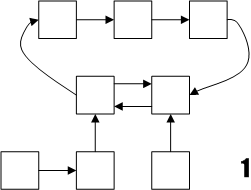

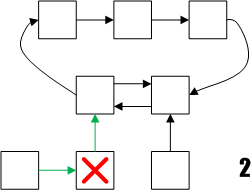

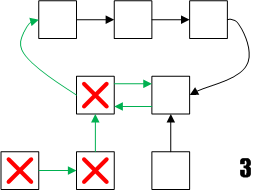

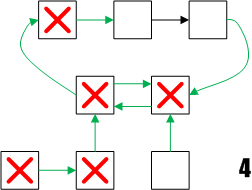

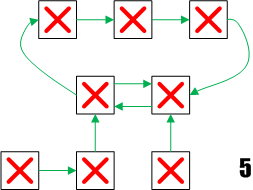

An example is shown in the sequence of diagrams above. The network begins unpoisoned (1), with boxes for processes and arrows for channels connecting them. The mid-bottom process introduces poison into its channels and terminates (2); poisoned channels are shown green, and terminated processes with a red cross. Any process that notices the poison on any of its channels terminates and poisons its other channels (3, 4) until the whole network has terminated (5). The poison may not happen to spread in such a lock-step fashion as shown here, but the ordering of termination using poison does not matter.
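The lock-step spreading can be simulated with a few lines of pure code. This is only a sketch: the network below is a made-up five-process example rather than the one in the diagrams, and it treats a channel as poisoned as soon as the process at either end has terminated.

```haskell
import Data.List (nub, sort)

type Process = Int
type Channel = (Process, Process)

-- One round: every process sharing a channel with a terminated process
-- notices the poison and terminates too.
step :: [Channel] -> [Process] -> [Process]
step chans dead =
  sort . nub $ dead ++ [q | (a, b) <- chans, (p, q) <- [(a, b), (b, a)], p `elem` dead]

-- Repeat rounds until no further process terminates, keeping each stage.
spread :: [Channel] -> [Process] -> [[Process]]
spread chans dead
  | next == dead = [dead]
  | otherwise    = dead : spread chans next
  where next = step chans dead

-- Hypothetical network: 1 - 2 - 3 - 4, with 5 also attached to 2.
network :: [Channel]
network = [(1, 2), (2, 3), (3, 4), (2, 5)]

main :: IO ()
main = mapM_ print (spread network [3])
-- [3]
-- [2,3,4]
-- [1,2,3,4,5]
```

Starting the poison at a different process changes the intermediate stages but not the final one, which matches the point that the ordering does not matter.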

Poison should not deadlock a process network, because the ordering of poison does not matter, and once all the channels are poisoned, there is nothing further that a process can wait on without being woken up. There are some tricky corner cases with poison, but I will discuss those in another post. To show how poison is used in the code, I will use my recent prime number sieve example. Here was the code before:

    import Control.Concurrent.CHP
    import Control.Concurrent.CHP.Utils
    import Control.Monad

    filterDiv :: Integer -> Chanin Integer -> Chanout Integer -> CHP ()
    filterDiv n input output = forever $ do
      x <- readChannel input
      when (x `mod` n /= 0) $
        writeChannel output x

    end :: Chanin Integer -> Chanout Integer -> CHP ()
    end input output = do
      x <- readChannel input
      writeChannel output x
      (filterDiv x |->| end) input output

    genStream :: Chanout Integer -> CHP ()
    genStream output = mapM_ (writeChannel output) [2..]

    primes :: Chanout Integer -> CHP ()
    primes = genStream ->| end

    main :: IO ()
    main = runCHP_ $ do
      c <- oneToOneChannel
      primes (writer c) <||>
        (forever $ readChannel (reader c) >>= (liftIO . putStrLn . show))

This is a complete example program that runs forever spitting out primes. Let’s imagine that we want to stop the program after a time — for example, we may want only the first 100 primes. First, we must add a poison handler to each process. The filterDiv process shows the typical poison handler:

    filterDiv :: Integer -> Chanin Integer -> Chanout Integer -> CHP ()
    filterDiv n input output
      = (forever $ do
           x <- readChannel input
           when (x `mod` n /= 0) $
             writeChannel output x
        ) `onPoisonRethrow` (poison input >> poison output)

That’s usually all that is involved — tacking an onPoisonRethrow block on the end that poisons all the channels that the filterDiv process is aware of. Here, the onPoisonRethrow block can either be outside the forever (which is broken out of by the poison exception) or inside the forever (rethrowing the poison exception would similarly break out of the forever). Our end process is more interesting, as it contains a parallel composition (via the |->| operator). These are the rules for parallel composition:

A parallel composition returns once all of its children have terminated (successfully, or through poison). Once they are all terminated, if any of them terminated because of poison, a poison exception is also thrown in the parent process.
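That rule can be imitated in plain IO to see its consequences. This is a sketch under assumptions, not CHP's implementation: `Poison`, `spawn` and `parallel` are hypothetical names, children are ordinary threads, and only the poison exception is modelled (any other exception in a child would leave the parent waiting).

```haskell
import Control.Concurrent (forkIO)
import Control.Concurrent.MVar (MVar, newEmptyMVar, putMVar, takeMVar)
import Control.Exception (Exception, throwIO, try)
import Data.IORef (newIORef, readIORef, writeIORef)

-- Hypothetical stand-in for CHP's poison exception.
data Poison = Poison deriving Show
instance Exception Poison

-- Run one child in its own thread, capturing either its result or its poison.
spawn :: IO a -> IO (MVar (Either Poison a))
spawn child = do
  var <- newEmptyMVar
  _ <- forkIO (try child >>= putMVar var)
  return var

-- Wait for every child to terminate; only then rethrow poison in the parent
-- if any child was poisoned.
parallel :: [IO a] -> IO [a]
parallel children = do
  vars    <- mapM spawn children
  results <- mapM takeMVar vars
  either throwIO return (sequence results)

main :: IO ()
main = do
  finished <- newIORef False
  outcome  <- try (parallel [throwIO Poison, writeIORef finished True])
  sibling  <- readIORef finished
  case outcome of
    Left Poison -> putStrLn ("parent poisoned; sibling finished: " ++ show sibling)
    Right _     -> putStrLn "no poison"
-- prints: parent poisoned; sibling finished: True
```

The poisoned child does not cut its sibling short: the parent sees the poison only after both have terminated.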

So, back to our end process. There are two ways we could add a poison handler. This is the simple way, tacking on a poison handler as before:

    end :: Chanin Integer -> Chanout Integer -> CHP ()
    end input output
      = (do
           x <- readChannel input
           writeChannel output x
           (filterDiv x |->| end) input output
        ) `onPoisonRethrow` (poison input >> poison output)

This will give the correct effect; if any poison is encountered during the input or output on the first two lines of the do block, the handler will poison both channels. If any poison is discovered in the filterDiv or end sub-processes, the channels will similarly be poisoned. In that second case, however, the sub-processes will already have poisoned the input and output channels themselves, so the handler poisons them a second time. Again, this multiple poisoning is harmless.

We do not need to add handlers to genStream or primes; genStream only has one channel, so if poison is encountered there, all of its (one) channels must already be poisoned, and primes is merely a parallel composition of two processes that will deal with any poison encountered. So our final change is to main, to shut the program down after 100 primes have come out:

    main :: IO ()
    main = runCHP_ $ do
      c <- oneToOneChannel
      primes (writer c) <||>
        (do replicateM_ 100 $ readChannel (reader c) >>= (liftIO . putStrLn . show)
            poison (reader c))

That poison command is what introduces poison into our pipeline. Thereafter, our poison handlers will take care of spreading the poison all the way along the pipeline and shutting down our whole process network without fear of deadlock. So primes exits from being poisoned, our prime-printing mini-process exits successfully, and thus the parallel composition (and hence runCHP_) also exits with poison. This is quite typical of a CHP program, and is not (necessarily) indicative of a failure.

Poison is a fitting way to terminate CHP programs, and poison handlers are fairly simple to write. It is a good idea to add them to all your CHP programs, but since they are very formulaic I will often leave them out of my blog examples so that they don’t get in the way of what I’m trying to explain.