Pieces of Yesod: Inverting a Haskell Function

I’ve recently been working with web frameworks, and decided to have a play with what Haskell has to offer for web resources. One of the several frameworks available is Yesod. Web frameworks need some sort of mapping between URLs and internal logic, usually called routing. In Yesod, routing is done with a “pieces” mechanism. You might declare in your routes:

/resources/#SortBy ResourcesR GET

And this will mean that if you access example.com/resources/d, that will translate into a call that turns the string "d" into a value of type SortBy, which is then passed to getResourcesR. It also means that if you use the syntax “@{ResourceR Date}” in your template files, this will be translated into example.com/resources/d. This translation is done is with the “pieces” classes, for example the single piece class:

class SinglePiece a where fromSinglePiece :: Text -> Maybe a toSinglePiece :: a -> Text

So a value should always be translatable into a part of a URL (i.e. Text), and an arbitrary URL part may be a valid value. Here’s a simple sorting-order type:

data SortBy = Date | Popularity | Title

And here’s the trivial SinglePiece instance:

instance SinglePiece SortBy where fromSinglePiece "d" = Just Date fromSinglePiece "p" = Just Popularity fromSinglePiece "t" = Just Title fromSinglePiece _ = Nothing toSinglePiece Date = "d" toSinglePiece Popularity = "p" toSinglePiece Title = "t"

There’s several drawbacks with this code. The major drawback is obvious: the code is repetitive! It expresses the same simple mapping from sort order to text twice, once in each direction. This is tedious. Another drawback is that is a potential source of bugs if I make a typo. SinglePiece does have an obvious property for testing: fromSinglePiece . toSinglePiece == Just. I also need to make sure I cover all the possible sorting values. The nice thing about toSinglePiece (rather than using a lookup table) is that if I use the GHC flags -fwarn-incomplete-patterns -Werror, it will warn me if I miss out a case. So if I add a new sort order, say Author, and forget to change toSinglePiece, I’ll get a compile-time error as soon as I try to compile (which is quicker even than a test). But fromSinglePiece doesn’t have the same advantage: if I forget a new case for Author, I’ll only know about it because of my test.

The way to fix my problems is to stop repeating myself. I can change the code to this:

instance SinglePiece SortBy where fromSinglePiece = invert toSinglePiece toSinglePiece Date = "d" toSinglePiece Popularity = "p" toSinglePiece Title = "t"

That invert function looks a bit magical! Broadly, its type is:

invert :: (a -> b) -> (b -> Maybe a)

Now, as it stands, the invert function is not implementable; there’s no way to arbitrarily invert a Haskell function. But we can brute force an inversion if we know the full range of inputs. That’s possible with the help of some automatically derivable Haskell type-classes:

data SortBy = Date | Popularity | Title deriving (Eq, Enum, Bounded) invert :: (Enum a, Bounded a, Eq b) => (a -> b) -> (b -> Maybe a) invert f = flip lookup $ map (f &&& id) [minBound..maxBound]

We use Enum and Bounded to get the list of all possible inputs, then form a lookup table which we can reference to find the inverse of a value. So now we’re able to write just toSinglePiece, and automatically derive fromSinglePiece. No repetition and less possible source of mistakes. The only problem is that invert might be a bit slow. Instinctively, it feels like the lookup table can’t be as fast as Haskell’s built-in case structure. To find out, let’s whip up a test using the Criterion benchmarking library.

I’m testing the performance of fromSinglePiece on the three valid URLs and one invalid, i.e.

main = defaultMain

[bench "benchName" $ nf (map fromSinglePiece :: [Text] -> [Maybe SortBy])

["d", "p", "t", "x"]]

I ran this once on the original fromSinglePiece that used a case statement (calling that “cases”), and once on the new fromSinglePiece that uses the lookup table (calling that “invert”), compiling with -O2 each time on GHC 7.0.3. Here’s the results:

benchmarking cases collecting 100 samples, 23951 iterations each, in estimated 1.011024 s bootstrapping with 100000 resamples mean: 423.0417 ns, lb 421.8167 ns, ub 426.2270 ns, ci 0.950 std dev: 9.136906 ns, lb 1.879618 ns, ub 16.88352 ns, ci 0.950 found 2 outliers among 100 samples (2.0%) 2 (2.0%) high severe variance introduced by outliers: 0.997% variance is unaffected by outliers benchmarking invert collecting 100 samples, 21664 iterations each, in estimated 1.011000 s bootstrapping with 100000 resamples mean: 466.8043 ns, lb 466.7298 ns, ub 466.9089 ns, ci 0.950 std dev: 448.0250 ps, lb 340.4309 ps, ub 723.9564 ps, ci 0.950 found 2 outliers among 100 samples (2.0%) 1 (1.0%) high severe variance introduced by outliers: 0.990% variance is unaffected by outliers

So the difference in speed is only around 10%. I can accept that cost in order to not repeat myself everywhere. Obviously, many types used with SinglePiece are not a trivial conversion between a type constructor and a text value, but for those that are, I found this trick useful.

Testing a Side-Effecting Haskell Monad

In this post I’m going to continue discussing testing CHP, this time focusing on the CHP monad. The monad is a bit complex (and indeed, there is a subtle bug in the current version of CHP to do with poison-handling) because it has several semantic aspects:

- it is an error-handling/short-circuiting monad (because it must obey the poison semantics),

- it has some extra semantics to do with choice that treats the leading action differently,

- underneath it all, there is the IO monad, which must be lifted correctly.

CHP is a monad with side-effects: quite aside from the communication with parallel processes, the presence of lifted IO means that it can have arbitrary side-effects. This means, if my terminology is correct, that we need a check for operational equality rather than denotational. Let’s explore this by considering testing an error-handling monad.

Testing An Error-Handling Monad

The Either type can be used as an error-handling monad by using the Left constructor to hold an error, and the Right constructor to hold a successful return. One property that should hold is that Left e >>= k == Left e. That’s an actual Haskell equality; we can test that property for any function k, and use actual equality on the result.

It’s also possible to use a similar error-handling monad transformer. The transformers library offers an ErrorT e m a type that allows you to wrap a monad “m”, using error type “e”, with successful returns of type “a” (with a function throwError :: e -> ErrorT e m a for introducing errors). We can test this using the Identity monad as type “m”, which would make sure the code was pure. But the semantics of this error transformer involve what actions occur in the error monad. For example, runErrorT (m >> throwError e >> n) should be equivalent to m >> return (Left e), but usually m (which is a monadic action) cannot be directly compared for equality.

Setting this in the IO monad makes it all much clearer: runErrorT (lift (putStrLn "Hello") >> throwError e >> lift (putStrLn "Goodbye")) should print Hello on the screen, and then finish with an error — and not print Goodbye. Testing this is difficult because we need to have a harness that can check for which side-effects occurred, making sure the right ones (e.g. printing Hello) did happen, and the wrong ones (e.g. printing Goodbye) didn’t happen.

This is exactly the situation that the CHP monad is in; we always have IO beneath the CHP monad (which we can’t swap out for a more easily testable monad!), so we must test equality of code by checking which side effects occurred. We need to check that liftIO_CHP (putStrLn "Hello") >> throwPoison >> liftIO_CHP (putStrLn "Goodbye") prints Hello then throws poison, and doesn’t print Goodbye. Printing text is a difficult side-effect to track in a test harness, but the nice thing about IO is that it has a whole host of side-effects to choose from! So I test by observing alterations to a Software Transactional Memory (STM) variable (TVar) — therefore my test inputs are of type TVar String -> CHP a.

Testing CHP’s Error-Handling

I use these two functions as a harness to execute the CHP code:

runCHPEx :: CHP a -> IO (Either SomeException a)

runCHPEx m = (Right <$> runCHP m) `C.catches`

[Handler (\(e::UncaughtPoisonException) -> return $ Left $ toException e)

,Handler (\(e::ErrorCall) -> return $ Left $ toException e)]

runCode :: (TVar String -> CHP a) -> IO (String, Either SomeException a)

runCode m = do

tv <- newTVarIO ""

r <- runCHPEx (m tv)

s <- atomically (readTVar tv)

return (s, r)

The runCode function gives back (in the IO monad) the String that was the final value of the TVar, and the result of running the CHP computation — either an uncaught poison/error exception or an actual return value. (One planned change to CHP semantics in version 3.0.0 is that uncaught poison gets translated into an exception, rather than giving runCHP a Maybe-wrapped return.) Here’s some example HUnit tests (with some useful helper functions):

obsTestPoison :: Test

obsTestPoison = TestList

[("ab", Right 'b') ==* \tv -> tv!'a' >> tv!'b'

,("", Left UncaughtPoisonException) ==* const throwPoison

,("a", Left UncaughtPoisonException) ==* \tv -> tv!'a' >> throwPoison

]

where

(!) :: TVar String -> Char -> CHP Char

(!) tv c = c <$ (liftIO_CHP $ atomically $

readTVar tv >>= writeTVar tv . (++[c]))

(==*) :: (String, Either UncaughtPoisonException Char)

-> (TVar String -> CHP Char) -> Test

(==*) x m = TestCase $ runCode m >>= assertEqual "" (onLeftSnd Just x)

. onLeftSnd fromException

where

onLeftSnd :: (b -> c) -> (a, Either b d) -> (a, Either c d)

onLeftSnd f (x, Left y) = (x, Left $ f y)

onLeftSnd f (x, Right y) = (x, Right y)

The left-hand side of the starred equality is the expected result. The right-hand side is the code (which is of type TVar String -> CHP Char) which should produce that result.

So by observing deliberately-placed side-effects in the code, we can check for equality in a side-effecting monad. These unit tests aren’t the only way I’m testing my monad though — in my next post, I’ll build on this to form a more advanced property-based testing technique.

Testing a Haskell Library

I’m currently gearing up for a CHP-3.0.0 release. Version 3 will be (internally) simpler in some places, faster in others, and has a slightly simplified API. The content of the library is mostly there — but I’m not yet ready to release because I want to write more documentation, and more tests.

Testing concurrent programs is notoriously tricky — the exact sequence of actions is non-deterministic, and sometimes bugs only occur when actions end up occurring at specific times. There's various aspects of CHP that particularly warrant testing:

- The central event-synchronisation mechanism (which underlies channels, barriers, choice and conjunction).

- The surrounding communication mechanisms (i.e. the exchange of data) for channels.

- The monad, poison, and all the associated semantics.

- Support for tracing.

- Wiring combinators (especially the four-way and eight-way grid wiring).

In this post, I’ll talk about the first item: testing the central event-synchronisation mechanism. This is absolutely crucial to the correct functioning of CHP — if there’s a problem in this algorithm, it will affect any code that uses CHP. The algorithm itself is effectively a search algorithm, which looks for events that can take place. The input to the search algorithm is scattered across several Software Transactional Memory (STM) TVars (Transactional Variables) thoughout the system, and the search takes place in a single transaction.

Testing STM-Based Algorithms

The really nice thing about testing an STM-based search algorithm is that we don’t actually have to worry about the concurrent aspects at all. An STM transaction is guaranteed to be free of interference from other transactions. In our test harness, we set up all the TVars to our liking, run the search transaction and look at the results — and this is a perfectly suitable test, even if the real system will have several competing transactions running at different times.

The Search Algorithm to be Tested

Our search algorithm looks at all of the processes and finds which events (if any) can currently synchronise. Each process makes a set of offers (or offer-set) — essentially saying that it wants to complete one of the offers. Each offer is a conjunction of events that need to be completed together. An event can complete if, and only if, the number of processes making an offer on it is equal to the event’s enroll count, and all the appropriate offers can complete. Time for an example! Let’s say we have processes p, q, and r which have the code:

p = readChannel c <&> readChannel d q = writeChannel c 0 r = writeChannel d 0 <|> (syncBarrier a <&> syncBarrier b)

Barrier “a” has an enrollment count of 1, barrier “b” has an enrollment count of 3, and the channels are all one-to-one, so they have a fixed enrollment count of 2. We say that p has an offer-set with two offers: one offer contains just “c”, the other offer contains just “d”. Process q has an offer-set with one offer, which contains just “c”. Process “r” has an offer-set with two offers: one contains just “d” and the other contains “a” and “b”. The answers to the search algorithm in this situation is that p and q can communicate together on channel c, or p and r can communicate on channel d.

Writing Individual Test Cases

So we have an example, but now we need to code that up. This involves creating events for a, b, c and d, setting their enrollment counts, recording all the offer-sets — and then running the search that we actually want to test. If we had to spell that out long-hand for every test, we would soon be bored stiff. So of course we make some helper functions to allow us to specify the test as simply and clearly as possible — in effect, we can create a testing EDSL (Embedded Domain Specific Language). Here’s my code that’s equivalent to testing our previous example:

testD "Blog example" $ do

[a,b,c,d] <- mapM evt [1,3,2,2]

p <- offer [c, d]

q <- offer [c]

r <- offer [d, a&b]

return $ (p ~> 0 & q ~> 0) `or` (p ~> 1 & r ~> 0)

The first line in the do-block creates our events with associated counts. The next lines make all the offers that we discussed. Note that the distinction between channels and barriers has vanished — that was useful for relating the example to actual CHP code, but underneath this, both channels and barriers use the event mechanism, which is what we’re actually testing here. The final line declares the possible results: either p will choose item 0 in its list of offers (that’s “c”) and q will choose item 0 (also “c”), or p will choose item 1 and r will choose item 0 (both “b”).

The nice thing here is that the test is easy to read, and corresponds very closely to what I wanted to express. Writing lots more tests is easy — which encourages me to do so, and thus my function ends up better tested. I’ve written about 25 of these tests, each aiming at testing different cases.

Implementation of my Testing EDSL

I’ll show some of the details here of how I put together my testing EDSL. The monad it uses is just a wrapper around the state monad, building up a list of events, and offers on those events:

newtype EventDSL a = EventDSL (State ([EventInfo], [CProcess]) a)

deriving (Monad)

data EventInfo = EventInfo {eventEnrolled :: Int}

deriving (Eq, Show)

type CProcess = [CEvent] -- The list of conjoined events

newtype CEvent = CEvent {cEvent :: [Int]}

Events and offers are represented simply by a wrapper around an integer (an index into the lists stored in our state) — we use newtypes to stop all the Ints getting confused with each other, and to stop accidental manipulation of them in the DSL. Our evt and offer functions are then trivial manipulations of the state:

evt :: Int -> EventDSL CEvent

evt n = EventDSL $ do (evts, x) <- get

put (evts ++ [EventInfo n], x)

return $ CEvent [length evts]

newtype COffer = COffer {cOffer :: Int} deriving (Eq)

offer :: [CEvent] -> EventDSL COffer

offer o = EventDSL $

do (x, ps) <- get

put (x, ps ++ [o])

return $ COffer (length ps)

To allow use of the ampersand operator in two different places (the input and the result), we add a type-class:

class Andable c where (&) :: c -> c -> c instance Andable CEvent where (&) (CEvent a) (CEvent b) = CEvent (a ++ b)

For the expected result, we use this data type:

data Outcome = Many [[(Int, Int)]]

Each item in the list is a different possible outcome of the test (when there are multiple results to be found, we want to allow the algorithm to pick any of them, while still passing the test). Each outcome is a list of (process index, chosen-offer index) pairs. We have some combinators for building this up:

(~>) :: COffer -> Int -> Outcome (~>) (COffer p) i = Many [[(p, i)]] instance Andable Outcome where (&) (Many [a]) (Many [b]) = Many [a++b] or :: Outcome -> Outcome -> Outcome or (Many a) (Many b) = Many (a ++ b)

Ultimately our test has type EventDSL Outcome, and we run the inner state monad, we get back: (([EventInfo], [CProcess]), Outcome). This forms the input and expected result for the test, which we feed to our helper functions (which are too long and boring to be worth showing here), which use HUnit for running the tests.

Property-based Testing

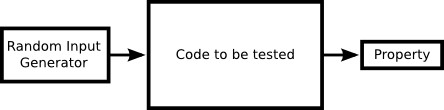

Probably Haskell’s most famous testing framework is QuickCheck (its cleverer cousin, Lazy Smallcheck, deserves to be just as well-known). This uses property-based testing: you specify a property and it generates random input, and checks that the property holds. This diagram is how I believe property-based testing is intended to work:

The size of the box is meant to indicate effort; the biggest bit of code is what you’re testing, you may need some effort to generate random input data, and then you check that some simple properties hold on the output.

So let’s think about some simple properties of our search algorithm. I can think of some:

- Any events that complete must have the same number of processes choosing them as the enroll count.

- Any events that don’t have enough processes offering definitely can’t complete.

- Processes can’t select an offer index that’s invalid (e.g. if p has 2 offers, it can’t select offer 3).

All of these properties seem to be to be pussyfooting around the main property of the search algorithm that I want to test:

- The search algorithm finds a correct answer when one is available.

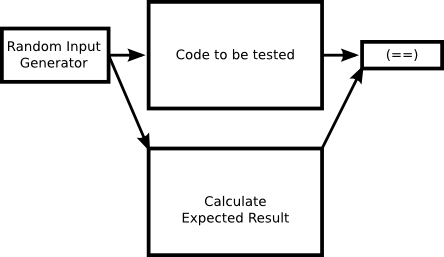

That certainly sounds simple. But how do we know what the possible correct answers to the search algorithm are? Well, we need to write a search algorithm! Often when I come to use QuickCheck, how it ends up working is this:

It feels a lot like double-entry validation; to calculate the expected result, I must code the same algorithm again! In this instance, I wrote a brute-force naive search that is checked against my optimised clever search. I’m not sure if this is still technically property-based testing, but QuickCheck is still handy here for generating the random input. (I guess a search algorithm is a bad choice for using property-based testing?) Regardless: I wrote my brute force search and tested my algorithm. It found no bugs, but now I have two sets of tests supporting me: one set of hand-crafted interesting tests, and a second version of the algorithm used with randomly generated input to pick up the cases I might have missed.

Summary

This post has been a bit light on technical detail, mainly because testing the algorithm involves a lot of dealing with the internals of my library that would take too long to explain here, and which would not be very interesting. My summary is this: constructing testing EDSLs makes your unit tests clearer to read and easier to write, which in turn encourages you to write more tests. I haven’t found property-based testing as useful as unit tests, but if you have a simple dumb solution and you want to check your optimised clever solution maintains the same behaviour, QuickCheck (or Lazy SmallCheck) is a handy way to test equality of functions.

Parallel Composition in Haskell

CHP, my Haskell concurrency library, allows you to run processes in parallel. One way of doing so is this binary operator:

(<||>) :: CHP a -> CHP b -> CHP (a, b)

Informally, the behaviour is as follows: this starts both processes running (using Haskell’s forkIO under the hood) and waits for them both to terminate and return their results. They can terminate at different times, but the parent process (the one running that composition) will wait for both to terminate before it returns. This parallel composition is not uncommon — for example, the parallel-io package provides a similar operation. In this post I’ll talk about the properties we would expect from this composition, and how to make them hold in CHP — but this easily generalises to making them hold for a parallel composition operator in the IO monad (CHP being a thin layer on top of IO).

Properties of Parallel Composition

I intuitively expect that parallel composition should be commutative: p <||> q should be the same as q <||> p. I’d also expect associativity; this:

do (x, (y, z) <- p <||> (q <||> r)

should be equivalent to:

do ((x, y), z) <- (p <||> q) <||> r

Another property (which I’ll call the unit law) is that composing something in parallel with a null (or more generally, a short-running) computation should have no effect, that is p should be equivalent to:

p <||> return ()

Finally, I’d expect independence; if I compose p in parallel with q, I would not expect p to behave any differently (or have its environment behave any differently) than if it was not part of a parallel composition. So there are our four properties: commutativity, associativity, the unit law and independence. Let’s consider the behaviour of our parallel composition, to try to make sure it satisifes all of these.

Normal Execution

For those, like me, who like concrete code to look at, a basic implementation of parallel composition that works with normal execution (we’ll add to it as we go along) is:

(<||>) :: CHP a -> CHP b -> CHP (a, b) (<||>) p q = liftIO_CHP $ do pv <- newEmptyMVar qv <- newEmptyMVar forkIO $ executeCHP p >>= putMVar pv forkIO $ executeCHP q >>= putMVar qv (,) <$> takeMVar pv <*> takeMVar qv

(We’re using liftIO_CHP :: IO a -> CHP a and executeCHP :: CHP a -> IO a to go between the monads.)

If all the processes being composed execute and terminate successfully, all the properties easily hold. All the processes start up, they all run, and when they all complete we get the results back. If one of the processes doesn't terminate, the properties also all hold — the composition waits for them all to terminate, so if p doesn't terminate, the whole composition will not terminate, no matter which order the processes are in, or how we've associated the composition.

Poison

(This is the only CHP-specific aspect of our parallel composition.) CHP processes don't have to terminate successfully; they can also terminate with poison. The semantics with respect to poison are simple, and preserve all our properties; if either process terminates with poison, the composition will terminate with poison once both child processes have finished. We can update our implementation, based around the adjusted type of executeCHP that now indicates whether a process terminated with poison:

data WithPoison a = Poison | NoPoison a

executeCHP :: CHP a -> IO (WithPoison a)

(<||>) :: CHP a -> CHP b -> CHP (a, b)

(<||>) p q = merge =<< liftIO_CHP (do

pv <- newEmptyMVar

qv <- newEmptyMVar

forkIO $ executeCHP p >>= putMVar pv

forkIO $ executeCHP q >>= putMVar qv

(,) <$> takeMVar pv <*> takeMVar qv)

where

merge (NoPoison x, NoPoison y) = return (x, y)

merge _ = throwPoison

This is very similar to our first definition, but it merges the values afterwards; they are only returned if neither side threw poison (otherwise poison is thrown). This again preserves all the properties, although associativity may require a little explanation. Consider p <||> (q <||> r). If p terminates with poison, the outer composition will wait for the inner composition to finish (which means waiting for q and r), then throw poison. If r terminates with poison, the inner composition will wait for q, then throw poison; the outer composition will wait for p to also finish, then throw poison. So the behaviour is the same no matter which part of the nested composition throws poison.

(Synchronous) Exceptions

The termination rules given for poison extend easily to exceptions (poison is really just a kind of exception anyway). If either process terminates with an exception (because it wasn’t trapped, or it was rethrown), the parent process will rethrow that exception. If both processes throw an exception, an arbitrary one of the two is thrown by the parent process. We make exceptions “beat” poison: if one process throws an exception and the other exits with poison, the exception is rethrown and the poison is dropped. Our slightly adjusted implementation is now:

(<||>) :: CHP a -> CHP b -> CHP (a, b)

(<||>) p q = merge =<< liftIO_CHP (do

pv <- newEmptyMVar

qv <- newEmptyMVar

forkIO $ wrap p >>= putMVar pv

forkIO $ wrap q >>= putMVar qv

(,) <$> takeMVar pv <*> takeMVar qv)

where

wrap proc = (Right <$> executeCHP proc) `catch`

(\(e :: SomeException) -> return (Left e))

merge (Left e, _) = throw e

merge (_, Left e) = throw e

merge (Right Poison, _) = throwPoison

merge (_, Right Poison) = throwPoison

merge (Right (NoPoison x), Right (NoPoison y)) = return (x, y)

The wrap function catches any untrapped exceptions from the process, and sends them back to the parent process instead of a result. The merge function prefers exceptions to poison, and only returns a result when neither side had an exception or poison.

(Another possibility for exceptions would have been ignoring the exceptions from the child processes; this would maintain commutativity, associativity and independence, but would have broken the unit law, because when p threw an exception, it would not be propagate if p was wrapped inside a dummy parallel composition.)

Asynchronous Exceptions

Asynchronous exceptions can be received by a thread at any time (unless you mask them), without that thread doing anything to cause them. CHP is a concurrency library with its own mechanisms for communicating between threads and terminating, so I don't usually use asynchronous exceptions in CHP programs. But that doesn't stop them existing, and even if you don't use them yourself (via killThread or throwTo) it doesn't stop them occurring; asynchronous exceptions include stack overflow and user interrupts (i.e. Ctrl-C). So we need to take account of them, and preferably do it in such a way that all our expected properties of parallel composition are preserved.

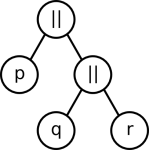

When we execute a nested parallel composition, such as p <||> (q <||> r), we actually have five threads running: one each for p, q, and r, one for the outer composition and one for the inner composition:

(Each circle is a thread.) We must assume, in the general case, that any one of these threads could potentially receive an asynchronous exception (e.g. the system can kill any thread it likes with an async exception, according to the docs).

Child Process Receives an Asynchronous Exception

Let’s start by discussing what happens if a child process such as q gets an asynchronous exception directly. One possibility is that q may trap the exception and deal with it. In that case, we're fine — the parallel composition never sees the exception, and since it's been trapped by q, it shouldn't escape from q anyway. But q may not handle it (or may handle and rethrow, which is the same from the point of view of our operator). So what should happen when q terminates with this exception? One possibility would be to immediately kill its sibling (or throw the exception to them). This would completely break independence; r would now be vulnerable to being killed or receiving an asynchronous exception initially targeted at another process, just because it happens to be composed with q. The only way to preserve independence is to treat the asynchronous exception in a child process as we do synchronous exceptions; we wait for both processes to terminate, and then the parent exits by rethrowing that asynchronous exception. This preserves commutativity, associativity (no matter which of p, q and r get the exception, all will be allowed to finish, and then the outer composition will end up rethrowing it, potentially via the inner composition doing the same) and independence. Our unit law is preserved if p receives the exception (see the next section for more discussion on the unit law).

Parent Process Receives an Asynchronous Exception

Now we move on to consider what happens if the parent process in a composition receives an asynchronous exception. This is actually one of the most likely cases, because if your program consists of:

main = runCHP (p <||> q)

If the user hits Ctrl-C, it’s the parent process that will get the exception, not p or q. We mustn't terminate only this thread and leave p and q dangling. The whole point of the parallel composition is that you scope the lifetime of the processes, and we don't want to break that. We could catch the exception, wait for p and q to terminate and then rethrow it. But if the exception is something like a user-interrupt then it seems wrong to ignore it while we wait for the oblivious child processes to terminate. So the semantics are this: when the parent process receives an asynchronous exception, it rethrows it to all the child processes. This trivially preserves commutativity. Independence is also satisfied; if the processes weren’t in the parallel composition, they would receive the exception directly, so rethrowing it to them is the same effect as if they weren’t composed together.

The unit law and associativity are in danger, though. Consider our unit law example: p <||> return (). If p does not trap the asynchronous exception that the parent throws to it, the unit law is preserved; p will get the exception, and then exit with it (regardless of the other side), which will cause the parent to exit with the same exception, so it is as if the composition was not there. If p does trap the exception, the behaviour becomes a race hazard with one of two behaviours (the difference is bolded):

- The parent receives the exception, and throws it to its children. The return () process has not yet terminated, this then terminates with an uncaught exception. p traps the exception and deals with it, but when the parallel composition exits, the exception is rethrown from the return () branch; this exception would not have been thrown if p was executing alone, because p would have caught it.

- The parent receives the exception, and throws it to its children. The return () process has already terminated succesfully. The exception is thus only thrown to p. p traps the exception and deals with it, so when the parallel composition exits, the exception is not visible, just as if p was executing alone.

There are two ways to “fix” this, by adjusting our unit law: one is to say that the unit law only holds if child processes do not trap exceptions. The other is to say that the unit of parallel composition is not return (), but rather:

return () `catch` (\(e :: SomeException) -> return ()

The problem with that is that catch is in the IO monad, and to be part of the parallel composition it needs to be in the CHP monad, which requires a lot of type-fiddling. I’m still thinking about this one for CHP, but if you were dealing with IO, changing the unit to the above would probably make most sense.

Associativity also has some caveats. Here’s our diagram again, with the outer composition at the top, and the inner composition its right-hand child:

If the outer composition receives an exception, all is well; the exception is thrown to the children, which includes the inner composition — and the inner composition throws to its children, so all the processes get it. If any of them don’t trap the exception, the exception will be the result of the whole composition no matter which way it was associated — and if all trap it, associativity is still preserved. However, if the inner composition receives an exception, associativity is not preserved; the inner composition will throw the exception to its children but the outer composition will not know about the exception immediately, so only the processes in the inner composition will see the exception. Now it matters which processes are in the inner composition and which is in the outer composition. But this seems morally fair: if an exception is thrown to an inner part of the whole composition, that already depends on the associativity, so it’s unreasonable to expect that the composition can function the same regardless of associativity.

Masking Asynchronous Exceptions

When defining our parallel composition operator, we also need to be careful about precisely when asynchronous exceptions might occur. One major difference between poison and asynchronous exceptions is that poison can only occur when you try to use a channel or barrier, whereas asynchronous exceptions can occur any time. This is a plus and minus point for both sides; it means poison is easier to reason about, but asynchronous exceptions can interrupt processes which are not using channels or barriers (e.g. that are blocked on an external call). To make sure we don’t receive an asynchronous exception at an awkward moment, such as inbetween forking off p and forking off q (which would really mess with our semantics!), we must mask against asynchronous exceptions, and only restore them inside the forked processes. You can read more about masking in the asynchronous exception docs. So, the final adjusted definition of parallel composition that I will give here is as follows (we don’t need a restore call around takeMVar because the blocking nature implicitly unmasks exceptions):

(<||>) :: CHP a -> CHP b -> CHP (a, b)

(<||>) p q = merge =<< liftIO_CHP (mask $ \restore -> do

pv <- newEmptyMVar

qv <- newEmptyMVar

let wrap proc = restore (Right <$> executeCHP proc) `catch`

(\(e :: SomeException) -> return (Left e))

pid <- forkIO $ wrap p >>= putMVar pv

qid <- forkIO $ wrap q >>= putMVar qv

let waitMVar v = takeMVar v `catch` (\(e :: SomeException) ->

mapM_ (flip throwTo e) [pid, qid] >> waitMVar v)

(,) <$> waitMVar pv <*> waitMVar qv)

where

merge (Left e, _) = throw e

merge (_, Left e) = throw e

merge (Right Poison, _) = throwPoison

merge (_, Right Poison) = throwPoison

merge (Right (NoPoison x), Right (NoPoison y)) = return (x, y)

(In fact, this isn’t quite enough. I’m currently adding a finallyCHP function that acts like its IO equivalent, and to support that I must push the restore call inside executeCHP to avoid asynchronous exceptions being acknowledged before the finallyCHP call is executed, but that’s a bit further into CHP’s internals than I want to go in this post.)

Hopefully that was a useful tour of parallel composition semantics in Haskell. Synchronous exceptions are easily dealt with, but asynchronous exceptions (which were perhaps designed more for the forking model of thread creation than this style of parallel composition) make things a fair bit trickier.

Communicating Haskell Processes: The Long And The Short Of It

The Long

It’s been a long time coming, but I have completed the final corrected version of my PhD thesis. It’s entitled, rather unimaginatively (and against received wisdom), “Communicating Haskell Processes”. (Communicating Haskell Processes, or CHP for short, is my concurrent message-passing library for Haskell.) A lot of what’s in there has been on this blog before. The new bits are the slightly revised CHP design (which will be in the forthcoming 3.0.0 release) and operational semantics in chapter 3. It’s freely available online for you to read, all 250+ pages.

The Short

Meanwhile, I’ve also put together a condensed version of the new material — including mobile (suspendable) processes, and automatic poison, neither of which I’ve written about anywhere else — into a paper for the Haskell symposium, which is a more palatable 12 pages. I’m not going to copy out the whole paper into this post, but here’s a quick summary of automatic poison.

Poison is a way to shut down a process network: processes should catch poison (which can occur if a channel you are using becomes poisoned) and poison all their own channels, spreading the poison throughout the network until all of the processes have shut down. The standard idiom is to surround your whole code with an onPoisonRethrow block that poisons the channels:

deltaOrig :: Chanin a -> [Chanout a] -> CHP ()

deltaOrig input outputs

= (forever $ do x <- readChannel input

parallel_ [writeChannel c x | c <- outputs]

) `onPoisonRethrow` (poison input >> mapM_ poison outputs)

Automatic poison puts all this poison handling into an autoPoison function that wraps your process with the poison handling, so for the above we can substitute this instead:

deltaAuto, deltaAuto' :: Chanin a -> [Chanout a] -> CHP ()

deltaAuto = autoPoison deltaAuto'

deltaAuto' input outputs

= forever $ do x <- readChannel input

parallel_ [writeChannel c x | c <- outputs]

I haven’t saved masses of code there, but I think the latter version is more readable. Read the paper for more details.

Feedback

Comments are welcome on the paper, which is due on Monday (6th June). Comments are slightly less welcome on the thesis, because it’s already been sent for binding! If there is a mistake, I’m happy to correct it in the online version.

Programming Language Fiction

The latest issue of The Monad Reader (an electronic magazine vaguely themed around Haskell) was released this week — but rather than featuring technical articles, it’s a special “poetry and fiction” edition. I decided to have a go at contributing, so I wrote a story about programming languages: in particular, what’s important in a programming language. It’s explored indirectly, via a discussion of one of my other interests: films. If you’re interested, take a look. I’m open to feedback on my contribution, and I imagine Brent (the editor) is also interested as to whether the special edition was a worthwhile experiment.

Interleaving

I spent some time late last year working further on what I originally called behaviours. It grew much longer than a blog post, so it’s ended up in the Monad.Reader electronic magazine, in the just-published Issue 17. Thanks are due to Brent Yorgey for his careful proof-reading and editing of the article. The accompanying code is already up on Hackage as the interleave package for those who want to play.

This week I’m also starting to prepare some slides; in two weeks’ time I will be presenting at PADL 2011 in Texas. That’s a talk that’s on the automated wiring that I’ve covered on the blog before. Once that trip is out of the way, I’m hoping to have a bit more time for Haskell coding again.

Sharp Cards in Haskell: The Odds

Recap

In a previous post I introduced a way to specify the action of drawing cards, culminating in the DrawM type:

data DrawM card a = DrawFail | Done a | DrawOne (card -> DrawM card a)

| DrawAny (DrawM card a)

So a draw can fail, succeed (with a result), require another card to be examined first or require any card to be drawn first. There is a monad instance that makes building items of this type quite straightforward. What it allows is not just to draw the cards using random chance, but also to calculate probabilities based on those specifications of drawing. In this post I’ll look at how to calculate those probabilities.

The Cards Type

Something that I omitted from my previous post was the definition of the Cards type. This represents a collection (deck) of cards. To aid in the way it is commonly used by the rest of the code, it is stored as a map from card to frequency, with cached card size:

data Cards a = Cards { cardsMap :: Map a Int, cardCount :: Int }

We can then define helpers functions such as this one to remove one card:

removeOneCard :: Ord a => a -> Cards a -> Cards a

removeOneCard x (Cards m s) = Cards (update (`maybeMinus` 1) x m) (s - 1)

maybeMinus :: Int -> Int -> Maybe Int

maybeMinus x y | x <= y = Nothing

| otherwise = Just (x - y)

There is also this function that is like fmap (but we can’t declare an actual Functor instance due to the Ord constraint):

mapCards :: (Ord b) => (a -> b) -> Cards a -> Cards b mapCards f (Cards m s) = Cards (mapKeysWith (+) f m) s

Calculating the Odds

The strategy for calculating the probabilities for a draw (i.e. something of type DrawM card a) is fairly straightforward. In the cases where the draw requires another card, we give it another distinct card from the deck — and keep doing this until it fails or is done. But we can make a couple of optimisations.

If we use the Event type that I explained in a previous post for doing the card drawing, we end up calculating the Rational probability for all the different outcomes, then summing them all afterwards. Adding two rationals is a slow process: it involves multiplication to get a common denominator, then a simplification of the fraction — for each addition. Adding thousands or millions of rationals will get expensive.

In this case, however, the fractions involved will be fairly regular. The chance of a card on the first draw will be that card’s frequency divided by the total number of cards (e.g. 4/52 probability of getting an ace), so we have different numerators over a common denominator. Similarly for the second card, that will be the appropriate adjusted frequency divided by the total number of cards minus one, and so on. For example, the chance of getting an ace on the second card is 4/52*3/51 + 48/52*4/51, or to put it another way: (4*3 + 48*4)/(52*51); the denominator will always be common for the same number of cards drawn. So the denominator at any given point will be n!/(n-d)! where d is the number of cards drawn (aka depth), or to put it in Haskell: product [(n-d+1)..n].

We capture this regularity in the denominator by changing how we store the probabilities. Instead of using an intermediate structure like Map a Rational (i.e. a map from outcome to fractional probability), we use Map Int (Map a Integer): the outer map is from number of cards drawn (aka depth, or d) and the inner map is from outcome to the numerator of the fraction; the depth inherently determines the denominator, as we have seen. Here’s the code:

chanceMap' :: (Ord a, Ord b) => Int -> Cards a -> DrawM a b

-> Map Int (Map b Integer)

chanceMap' n cards (Done x)

| cardCount cards >= n = singleton 0 (singleton x 1)

| otherwise = empty

chanceMap' _ _ DrawFail = empty

chanceMap' n deck (DrawAny m) = chanceMap' (n+1) deck m

chanceMap' n deck (DrawOne f)

= mapKeysMonotonic (+1) $ unionsWith (unionWith (+))

[fmap (toInteger firstCount *) <$>

chanceMap' n (removeOneCard firstCard deck) (f firstCard)

| (firstCard, firstCount) <- toList $ cardsMap deck]

We’ll come back to the Int parameter momentarily. If the draw is done already, we return a map from 0 depth to the outcome mapped to a frequency count of 1. If the draw failed, our map is empty. The Any case is related to the integer, so we’ll leave that aside. The last case is if (at least) one more card is needed. The list comprehension explores all the distinct cards (with associated frequencies) from the deck: in each case, we recurse with the deck minus the card we drew, and feeding the card to the draw to see what the outcome is. We merge the maps for the different cards by unions that sum the counts inside the inner map, and then we add one to all keys because everything we processed is actually one deeper than it thought it was. This key-changing operation is why I use Map Int instead of IntMap, although I guess I could pass the depth down the tree instead of building it in on the way back up. I haven’t profiled to check the difference.

The Int parameter is an optimisation. If at some point we need to draw any card from the deck and ignore it, that doesn’t actually affect the odds of what comes afterwards, provided there are still enough cards in the deck to complete the draw. This is evident in Pontoon: there are many cards drawn after yours and given to other players, but if you don’t know what they are, that doesn’t change the odds of the next cards you draw. So our optimisation is this: if you do draw any card from the deck, then instead of actually drawing, we just keep count of how many cards you wanted to do this with. As long as when we’re finished drawing, there are enough cards to draw and discard (which we keep track of with the Int parameter), that’s fine and the odds are unaffected. If there aren’t, the draw fails (as it would have done if we’d drawn the cards at the specified time).

Further Processing

Let’s take the example of drawing an ace in the first three cards from a full deck. We can specify this using our DrawM type thus:

drawUntil (== Ace) (Just 3) >> return ()

The paths that lead to a successful draw involve finding one of 4 aces (in 52 cards) or one of the other 48 (of 52) followed by one of the 4 (of 51) aces or one of the 48 (of 52) followed by one of the 47 (of 51) followed by one of the 4 (of 50) aces. Note how the denominators are determined by the depth, as previously discussed. The map returned by chanceMap’ therefore looks like this:

fromList [(1, singleton () 4), (2, singleton () (48*4)),

(3, singleton () (48*47*4))]

What happens to that is coded in the outer function:

chanceMap :: (Ord card, Ord a) => Cards card -> DrawM card a -> Map a Rational

chanceMap deck m = (% head permutes) <$> unionsWith (+) $ elems $

mapWithKey (\k v -> fmap ((permutes !! k) *) v) depthToCount

where

depthToCount = chanceMap' 0 deck m

maxDepth = maximum (0 : keys depthToCount)

deckSize = cardCount deck

permutes :: [Integer]

-- A lookup for (deckSize `permute`) . (maxDepth -)

permutes | maxDepth == 0 = [1, 1]

| otherwise = reverse $ 1 : scanl1 (*)

[toInteger (deckSize - maxDepth + 1) .. toInteger deckSize]

Let’s pick through it. depthToCount calls chanceMap' to get the aforementioned map. We use a slight optimisation to share the permutes function. Let’s briefly use an example of drawing up to 5 cards from 52. We will want the values 48, 49*48, 50*49*48, 51*50*49*48 and 52*51*50*49*48 at various points; there’s no point duplicating the calculation involved, so we use scanl1 to calculate the last number in that list, but keep track of the intermediary values encountered along the way.

When we get back our earlier map for the probability of drawing aces fromList [(1, singleton () 4), (2, singleton () (48*4)), (3, singleton () (48*47*4))], we multiply each value in the inner map by the permutes value for its depth. For 3, which was the maximum depth, that’s actually 1. For 2 we multiply by 50, and for 3 we multiply by 51*50. So we end up with the calculation ((4 * 51*50) + (48*4 * 50) + (48*47*4 * 1)) / (52*51*50). This is just the standard way of calculating 4/52 + (48*4)/(52*51) + (48*47*4)/(52*51*50), by converting to a common denominator. The division and simplification only ever happens once; all the other operations are multiplication and addition. Our calculation simplifies to the Rational value 1201%5525, incidentally.

Shortening the Odds Calculation

So how long does this calculation take? For a game with, say, nine distinct cards then no matter how many times those nine distinct cards appear in the deck, to examine all paths of drawing, say, 5 cards is in the worst case (i.e. where no draw fails early) going to look at 9*8*7*6*5 different sequences, or around 17,000. However, for a deck of playing cards with 52 distinct cards, the same calculation is 52*51*50*49*48 or a bit over 300,000,000. Ouch! Playing cards are actually the degenerate case, in my opinion: I struggle to think of any other card system where each card is distinct (and only appears once). But they are quite widely used, so it would be nice to optimise where possible.

There is one further class of optimisations that can be made, but it must be made by the user of the library. Consider the Pontoon example. We were looking for certain combinations of ranks, but we didn’t care about suit at all. So rather than operate on some deck :: Cards PlayingCard, we can use the mapCards function to get: mapCards rank deck :: Cards Rank. In this latter deck of cards, we still have 52 cards, but only thirteen distinct: Two through Ace (or Ace through King, if you prefer), each with a frequency of four. This means that examining all possible cards requires the worst case of 13*12*11*10*9 sequences, or around 150,000 — an improvement by a factor of 2,000 on examining all the 52 separately! You’ll note that our original specifications in the previous post already feature this optimisation and use DrawM Rank a instead of DrawM PlayingCard a. In general, reducing the number of distinct cards like this will always produce a large speed-up. However, it cannot be done by the library itself because we can’t know which aspects the drawing function will be interested in examining.

The work described in this post and the last features in the new game-probability-1.1 release on Hackage.

Sharp Cards in Haskell: Drawing Cards

Following on from my previous post on dice, in this post I’ll look at the support for cards that I am going to add to the next release of my game-probability library. So, cards: to work out the probability of drawing a particular sequence of cards from a deck of cards, you first need to specify which sequences you are interested in. How might you specify this, in Haskell? Here’s one way:

type DrawSpec card = (Int, [card] -> Bool)

That’s the sequence length paired with a function that decides whether a given sequence of that length is one we are interested in. There are two problems with this solution, though. Firstly, it requires fixing the length of the sequence upfront; we cannot decide how many cards to continue drawing based on the ones we have drawn. Secondly, it needs to be fed all the cards it requires at once, which means we must supply it with each possible permutations of cards separately, which will be quite inefficient.

Iterative Drawing: DrawF

Both of these problems can be solved with a more iterative approach:

data DrawF card = DrawFail | Done | DrawOne (card -> DrawF card)

| DrawAny (DrawF card)

So we have several options for the state of a draw: it can be successful, it can have failed, or it can need another card: DrawAny is like DrawOne . const, but can be much more efficient, as we’ll see in a future post. Let’s say we want to specify drawing N cards that are the same:

drawSameF :: Eq card => Int -> DrawF card drawSameF n = if n <= 0 then DrawFail else DrawOne (drawMatch (n-1)) drawMatchF :: Eq card => Int -> card -> DrawF card drawMatchF 0 _ = Done drawMatchF n tgt = DrawOne (\x -> if x == tgt then drawMatchF (n-1) tgt else DrawFail)

With these sorts of specifications, we can much more easily narrow down the possibilities. Imagine you have a deck with multiple copies of each of ten distinct cards and you want to know the chance of drawing 5 the same. You can feed all ten to this function and get ten DrawOne results, which can be thought of as ten branches in a search. Then when you feed the ten cards again to each of those branches, only one card will not cause a failure, so you will still have ten branches. And the same the next time. So you will try 10*(10+10+10+10) cards in all: 400. This compares well to the 10^5=10,000 combinations you would trawl through if considering all possible permutations.

You can use this functional-style (hence the F suffix) Draw type to describe all draws relatively easily, using some function like this at the top level to ask for the probability of achieving that draw given a collection of cards:

chance :: [card] -> DrawF card -> Rational

Expanding the draw: DrawM

But there is one slight limitation. Let’s imagine that you want to return some result from the draw; for example, you may want to know what card it was that you drew many of, or you may want to return a count of cards. Currently DrawF has no way to return such a result. But we could add one by adding a value to the Done constructor:

data DrawM card a = DrawFail | Done a | DrawOne (card -> DrawM card a)

| DrawAny (DrawM card a)

As you may guess by the new M suffix, and important result of this is that we can make the type a monad. The instance practically writes itself:

instance Monad (DrawM card) where return = Done (>>=) DrawFail _ = DrawFail (>>=) (Done x) k = k x (>>=) (DrawAny m) k = DrawAny (m >>= k) (>>=) (DrawOne f) k = DrawOne (\x -> f x >>= k) fail _ = DrawFail

We use the Done constructor for returning values, and we make DrawFail short-circuit the draw, ignoring anything that comes after. We push the binds for DrawOne and DrawAny inside their constructors. There are several useful helper functions we can define in this new DrawM type:

draw :: DrawM card card draw = DrawOne return drawAny :: DrawM card () drawAny = DrawAny (return ()) drawWhere :: (card -> Bool) -> DrawM card card drawWhere f = DrawOne (\x -> if f x then return x else DrawFail)

I couldn’t decide whether Done or return was clearer above, so swap them mentally if that helps. The drawMatch function from earlier goes from recursion to replicate:

drawMatch :: Eq card => Int -> card -> DrawM card () drawMatch n tgt = replicateM_ n (drawWhere (== tgt))

I like the monadic interface; drawing cards is inherently a sequence of steps, so seeing it written in do-notation makes it clearer than the nested style of the earlier type.

An Example: Pontoon

As an example, we’ll use Pontoon, a British variant on Blackjack. The general idea is the same as Blackjack (be closest to 21, but not over), with an added rule about five-card tricks (five cards with a total <= 21), which beat any non-Pontoon hand (Pontoon being 21 with two cards). We’ll leave aside the rules on splitting a pair to keep the example short. We’ll start with some quick definitions of a deck of playing cards (some Haskell that could come out of a beginner’s text-book):

data Rank = Two | Three | Four | Five | Six | Seven | Eight | Nine | Ten

| Jack | Queen | King | Ace

deriving (Bounded, Enum, Eq, Ord, Show, Read)

data Suit = Clubs | Diamonds | Hearts | Spades

deriving (Eq, Ord, Show, Read)

data PlayingCard = PlayingCard {rank :: Rank, suit :: Suit}

deriving (Eq, Ord, Show, Read)

deck :: Cards PlayingCard

deck = makeCards [PlayingCard r s | r <- [minBound .. maxBound],

s <- [Clubs, Diamonds, Hearts, Spades]]

Now we can move on to the Pontoon-specific parts. First we will need a function that calculates the possible totals of a set of cards. Since aces can be low (worth 1) or high (worth 11) at the player’s choice, there are potentially multiple different totals that each hand can have:

values :: [Rank] -> [Int] values [] = [0] values (Ace:xs) = concat [[1 + v, 11 + v] | v <- values xs] values (x:xs) | x `elem` [Jack, Queen, King] = map (10 +) (values xs) | otherwise = map ((fromEnum x + 2) +) (values xs)

The slightly awkward bit is the fromEnum part; since fromEnum Two == 0, we have to add 2 to get the right value. We can also define a data-type to hold the different non-bust results for a hand in Pontoon:

data Result = StuckOn Int | FiveCardTrick | Pontoon deriving (Eq, Ord, Show)

Note that the derived Ord instance will actually give a proper ordering of which hand is best: pontoon beats five-card trick beats the other results which are ordered by their numerical result (higher is better).

Pontoon Draw Strategy

The missing part is now a function to represent drawing cards in Pontoon. We’ll define a function corresponding to a fairly simplistic strategy: while we haven’t reached exactly 21 (or a five-card trick) with our hand, we’ll keep drawing as long as we’re below a constant upper limit (e.g. 15):

drawStrategy :: Int -> DrawM Rank Result

drawStrategy stickMin

= do firstTwo <- replicateM 2 draw

if 21 `elem` values firstTwo

then return Pontoon

else keepGoing firstTwo

where

keepGoing cur

| all (> 21) totals = badHand -- Bust!

| length cur == 5 = return FiveCardTrick

| 21 `elem` totals = return $ StuckOn 21

| any (< stickMin) totals = do {c <- draw; keepGoing (c:cur)}

| otherwise = return $ StuckOn (maximum $ filter (<= 21) totals)

where

totals = values cur

To begin with, we draw two cards. If we can immediately achieve 21, that’s Pontoon and we stop. Otherwise we consider whether to keep drawing. We then have five choices listed in order (the guards of the inner function). If every possible total is above 21, we’re bust (badHand = DrawFail). If we have five cards (and aren’t bust), we have a five-card trick and we stop. If we’ve otherwise hit 21, we have a normal 21 with either three or four cards. The final two guards cover our decision: if we’re below our upper limit we keep drawing, and otherwise we stick with the best total we can manage that’s 21 or under.

So that fairly neatly lays out all the possibilities involved in drawing a hand of Pontoon. Now that we have this specification, we can calculate some odds. I’ll show the code and results here, but I will return to how the probabilities are calculated in my next post (to avoid this one growing too long):

main :: IO ()

main = do print $ to3dp <$> chanceMapOn rank deck (optional $ drawStrategy 14)

print $ to3dp <$> chanceMapOn rank deck (optional $ drawStrategy 16)

print $ to3dp <$>

compareEvent (eventDrawOn rank deck (optional $ drawStrategy 14))

(eventDrawOn rank deck (optional $ drawStrategy 16))

where

to3dp :: Rational -> String

to3dp x = printf "%.3f" (fromRational x :: Double)

The optional function effectively maps successful draws into Just values and failed draws (i.e. bust) into Nothing; see the notes at the end of this post if you want more detail. This code will print three lines (which I’ve re-flowed below): the first will have a map from result to probability (which by that point will be a String) when we draw with less than 14, and the second will be the same when we draw with less than 16; the third will give the probability of the first strategy producing a worse, equal or better result than the second:

fromList [(Nothing,"0.086"), (Just (StuckOn 14),"0.125"), (Just (StuckOn 15),"0.122"), (Just (StuckOn 16),"0.114"), (Just (StuckOn 17),"0.110"), (Just (StuckOn 18),"0.101"), (Just (StuckOn 19),"0.095"), (Just (StuckOn 20),"0.135"), (Just (StuckOn 21),"0.050"), (Just FiveCardTrick,"0.013"), (Just Pontoon,"0.048")] fromList [(Nothing,"0.216"), (Just (StuckOn 16),"0.132"), (Just (StuckOn 17),"0.128"), (Just (StuckOn 18),"0.119"), (Just (StuckOn 19),"0.113"), (Just (StuckOn 20),"0.152"), (Just (StuckOn 21),"0.069"), (Just FiveCardTrick,"0.022"), (Just Pontoon,"0.048")] fromList [(LT,"0.485"), (EQ,"0.097"), (GT,"0.418")]

So even though we can see from the first two sections that the first strategy (drawing again when less than 14) is much less likely to leave you bust (the Nothing outcome), we can see from the third section that in general it is more likely to lose (the LT outcome) against the second strategy (drawing again when less than 16) than win (the GT outcome). That’s a useful result, from what I hope is relatively readable code!

In the next post, we’ll take a look at how the probabilities are calculated.

Notes

- The DrawM monad is a little reminiscent of iteratees, with all chunks being size 1. Generally, iteratees are designed to receive chunks of data as they become available. What this work does is take a DrawM item and supply with it many different possible next inputs to see what happens. I don’t know if much has been done with iteratees in this vein: it is somewhat like fuzzing iteratees to see what happens with different data.

- This work looks at permutations of cards rather than combinations. Obviously looking at combinations could be much faster since there are a lot less of them (factorial in the number of cards drawn). Permutations is the more general case, and allows for testing based on the sequence of cards. In the Pontoon example, the sequencing and choice of drawing more is vital. In other scenarios, it isn’t needed, and examining all permutations merely adds computational overhead: win some, lose some.

- The Pontoon example combines two aspects: one is the rules on scoring and stopping, and the other is the strategy for when to draw more. This is primarily convenience for the example: it would not be hard to alter the function to not take the stickMin parameter, and instead take a function that replaces the entire any (< stickMin) totals guard, thus parameterising the function by the user-defined strategy.

- The optional call in the example uses the Alternative instance for DrawM card, which allows choice between draws to be expressed, and is given here:

instance Alternative (DrawM card) where empty = DrawFail (<|>) DrawFail x = x (<|>) (Done x) _ = Done x (<|>) (DrawAny m) (DrawAny n) = DrawAny $ m <|> n (<|>) (DrawOne f) (DrawOne g) = DrawOne $ \x -> f x <|> g x (<|>) (DrawAny m) (DrawOne g) = DrawOne $ \x -> m <|> g x (<|>) (DrawOne f) (DrawAny n) = DrawOne $ \x -> f x <|> n -- Done or DrawFail on RHS: (<|>) (DrawAny m) n = DrawAny $ m <|> n (<|>) (DrawOne f) n = DrawOne $ \x -> f x <|> n

The top few cases are straightforward: the empty choice is to fail, choosing between failure and anything will be anything, and choosing between success and anything will be success — the docs for Alternative don’t specify this, but I always take the operator to be left-biased. If we choose between DrawAny and DrawOne, we do need to know what card is next because the DrawOne needs to know. However, in all the other cases, neither side cares what the card is, so we can stick with DrawAny.

Nice Dice in Haskell

Carrying on my theme of (board/card) game design, I recently wanted to play around with probabilities and dice. I wanted to find out the frequencies of the outcomes for various combinations of dice, and things like the probability of getting a repeated result on that combination. I began to envisage a neat way to represent dice in Haskell — and then a quick google led to discovering that the representation had already been thought of, published and released on Hackage. That saves a fair bit of work, at least. I lightly wrapped the probability library with the API that I wanted, and just released my game-probability library/EDSL on Hackage. The library has documentation and examples; I will focus more on looking at the design rationale in this post.

The Dice API

The central type is EventM a, which is a probabilistic event with outcomes of type a: each EventM a contains one or more outcomes of type a, each with an associated probability stored as a Rational. That means we get exact fractions, so 1/6th really is stored as 1/6th, not the nearest approximation that a Double can manage. For those who are interested in how EventM relates to the underlying probability library, it builds on the data-type from Numeric.Probability.Distribution:

import Numeric.Probability.Distribution (T, decons, norm, uniform) newtype EventM a = EventM (T Rational a) type DieRoll = EventM Int

With the aid of a helper function we can immediately define lots of standard dice:

makeEvent :: [a] -> EventM a makeEvent = EventM . uniform d4, d6, d8, z10, d10, d12, d20, d100 :: DieRoll d4 = makeEvent [1..4] d6 = makeEvent [1..6] d8 = makeEvent [1..8] z10 = makeEvent [0..9] d10 = makeEvent [1..10] d12 = makeEvent [1..12] d20 = makeEvent [1..20] d100 = makeEvent [1..100]

For those who don’t know their dice, d6 is a standard abbreviation for a 6-sided die. d10s have 0 to 9 written on the faces, and depending on the game the zero may either be read as 0 or as 10: d10 is the 1-10 interpretation, while z10 is the 0-9 interpretation. This is consistent with dice notation (wikipedia really does have a page for everything!).

When you try to show a die you get a faithful but not super-readable representation of a die:

> d6 fromFreqs [(1,1 % 6),(2,1 % 6),(3,1 % 6),(4,1 % 6),(5,1 % 6),(6,1 % 6)]

We can improve on that by customising the Show instance for our EventM wrapper. What would be really nice is a more visual representation of the chances associated with each event. To that end, I’ve chosen to display a horizontal bar-chart of outcomes with their associated probabilities. We find a common denominator for all the different probabilities, scale them up to integers and print them out with the corresponding bars. Now when we ask to see a die, we get a nicer visualisation:

> d6 1: # 2: # 3: # 4: # 5: # 6: #

Of course all the standard dice above have an equal chance of getting each number, so they’re quite dull. What we really want it for is more complex combinations, like for example the sum of a d4 and a d6:

> d4 + d6 2 : # 3 : ## 4 : ### 5 : #### 6 : #### 7 : #### 8 : ### 9 : ## 10: #

Note that we added two dice together there! The DieRoll type has a Num instance which allows dice to be added together. Intuitively, adding two dice adds their outcomes, and works out the corresponding probability for each summed outcome. We’ll come back to the details of the Num instance later, but we can also add constant factors to the dice:

> d4 + 1 2: # 3: # 4: # 5: #

And we can multiply by a constant:

> d6 * 2 2 : # 4 : # 6 : # 8 : # 10: # 12: #

Note that 2 * d6 is the same as d6 + d6, while d6 * 2 scales the outcome of the d6 by 2. So multiplication of dice is not commutative! This fits with how dice are usually written, and saves some confusion at the expense of causing other confusion (we’ll come back to this aspect later as well):

> 2 * d6 2 : # 3 : ## 4 : ### 5 : #### 6 : ##### 7 : ###### 8 : ##### 9 : #### 10: ### 11: ## 12: #

We can actually allow rolling of the dice using a small roll :: DieRoll -> IO Int function:

> replicateM 10 (roll d6) [6,5,6,2,5,5,2,3,5,3]

With a little bit of point-free Haskell (which I’ll leave you to dig through if you’re interested) we can check that the frequencies roughly pan out if we roll lots of times:

> map (head &&& length) . group . sort <$> replicateM 10000 (roll d6) [(1,1701),(2,1681),(3,1669),(4,1619),(5,1667),(6,1663)]

So that sorts out rolling the dice. However, the benefit of a reified probability representation is that we can also perform queries on the resulting distributions.

Equality

A DieRoll stores probabilities associated with each outcome. We can use these to prove equality of different dice rolls. A simple example:

> d6 + d6 + d6 == 3 * d6 True

Returning to our earlier mention of d10 and z10, one way to get from a z10 to a d10 is simply to add one to the former:

> z10 + 1 == d10 True

That’s not actually the way we tend to map between the two with real dice, though. We’ll define a handy helper function, subst, which changes one specific outcome to another, and use this to show that reading a 0 on the low die as a 10 accomplishes the same effect:

subst :: Eq a => a -> a -> EventM a -> EventM a subst tgt rep = fmap (\x -> if x == tgt then rep else x)

> replace 0 10 z10 == d10 True

We can also check various ways for converting two d10/z10 to a d100, for example:

> z10 * 10 + d10 == d100 True > subst 0 100 (z10 * 10 + z10) == d100 True

Further Queries

As well as checking equality of the entire roll, we can query specific probabilities. The chancePred function checks the chance of a particular predicate being satisfied:

> chancePred (>= 16) (3*d6) 5 % 108

So 5/108 chance of getting 16 or higher when rolling 3 six-sided dice. You can use the similar function chanceRel to check the chance that a particular relation between two events is satisfied. For example, you might want to know the chance of a d20 beating 3d6:

> chanceRel (>) d20 (3*d6) 19 % 40

The Full Monad

EventM is a monad (and functor and applicative functor, of course). This means you can perform all sorts of complicated combinations of dice — for example, if you want to prototype a game involving dice, you can write your rules in Haskell and see the chances of various outcomes. To demonstrate this, I’m going to borrow an example from the library documentation. Bonus points if you spot which game this comes from without looking at the library docs.

You roll a given number of 10-sided dice, rolling one extra die for every 10 you score if you are specialised in that skill. The number of 1s on the original roll are subtracted from the total number of dice that equal or exceed the difficulty target:

successes :: Int -> Int -> Bool -> EventM Int

successes target dice specialised

= do initial <- replicateM dice d10

extra <- if specialised

then replicateM (count (== 10) initial) d10

else return []

return (count (>= target) (initial ++ extra) - count (== 1) initial)

where

count f xs = length (filter f xs)

The exact mechanics of that system caused much dispute back in the day with other players. I still may not have it correct now, but I can tell you that an unambiguous description like the above would have prevented much confusion! I wonder if we could get all game-writers to write down their intricate dice-based systems in Haskell.

The Inner Workings — Num

Finally, I’ll explain the inner workings of the probability type. You can think of EventM a as being a synonym for Map a Rational, where the Rational type represents a probability as explained earlier. (Due to some problems with type-class constraints, it has to be held in a list, but same idea.) We can imagine fromList and toList functions (like those for Map) to help us in this section:

toList :: EventM a -> [(a, Rational)] fromList :: [(a, Rational)] -> EventM a

We’ll assume that fromList adds the probabilities associated with any duplicates in the list, i.e. it acts like Map.fromListWith (+). Armed with these, I’ll explore the Num instance for EventM Int (aka DieRoll).

Addition and subtraction

Let’s start with: what does it mean to add two dice? Each combination of the two dice must have their probabilities multiplied, with their outcomes added, and any duplicate outcomes (e.g. 1 and 6 gives the same outcome as 2 and 5) must be added together at the end (which we’ve said fromList will do):

addDice :: DieRoll -> DieRoll -> DieRoll addDice a b = fromList [(ax+bx, ap*bp) | (ax,ap)<-toList a, (bx,bp)<-toList b]

Subtracting one die from another is trivially similar. So how do we add constants to a die? Well, one way to do it — and perhaps in a sense the most obvious way — is to use EventM’s functor instance. Using fmap on EventM a applies a pure function to all the outcomes of the die, so fmap (+3) would add the constant value 3 to each outcome. However, there is another way. We can convert 3 to a die by making it a certain outcome:

certain:: a -> EventM a certain x = fromList [(x, 1)]

So every time you roll the “die” certain 3, it will come up with a 3 (talk about a loaded die!) Now think about the result of addDice (certain 3) d; all the probabilities of the second die will be multiplied by 1, leaving them untouched, while the outcomes will have 3 added to them: exactly the effect we wanted. So hopefully you can see that we can form a Num instance for our dice (which we’ve used throughout this post), where (+) = addDice, negate = fmap negate, and fromInteger = certain . fromInteger. But what about multiplication?

Multiplication

From a user’s perspective, multiplication of, say, 2 and d6 could be taken to mean one of two things — either: roll one d6 and multiply all outcomes by two, or: roll two d6 and add them together. We can implement the former using our certain function and a trivial modification to addDice:

multDice :: DieRoll -> DieRoll -> DieRoll multDice a b = fromList [(ax*bx, ap*bp) | (ax,ap)<-toList a, (bx,bp)<- toList b]

It should be obvious from the definition that this function is commutative. multDice (certain 2) d6 multiplies all outcomes of the d6 by 2, and multDice d6 (certain 2) does the same. multDice (certain 3) (certain 4) is the certain outcome 12, and multDice d4 d4 rolls two d4 and multiplies their outcomes, giving this interesting distribution:

1 : # 2 : ## 3 : ## 4 : ### 6 : ## 8 : ## 9 : # 12: ## 16: #

The multDice function is a consistent, commutative definition of multiplication. But it holds a hidden surprise for the user if we use this for our definition of (*): 2 * d6 is not the same as d6 + d6! The former doubles the outcome of one d6 (if we use multDice), whereas the latter adds the outcomes of two d6. I think this is sufficiently surprising that it is worth exploring the other alternative: what if we define a function that takes its first argument and rolls that many of its second argument (summing the outcomes):

rollN :: DieRoll -> DieRoll -> DieRoll

rollN a b = do n <- a

sum <$> replicateM n b

That uses the monad instance, which I haven’t got into much in this post, but hopefully its meaning is clear: find out the result of the first die roll, then roll that many of the second die, summing the results. We can work out that rollN (certain 2) d6 is the same as d6 + d6. What do you think the result will be of rollN d6 (certain 2)? Well, we roll the d6, then that many times we receive the certain outcome 2, and add all these together… which means we effectively scale the d6 result by 2 — the other behaviour we were using earlier! rollN (certain 3) (certain 4) is still the certain outcome 12, while rollN d4 d4 rolls one d4 to determine how many further d4 to roll, giving a rather complex distribution!

This rollN function has a nice parallel to dice notation where 2d6 means roll two d6, whereas d6 * 2 means double the result of a single d6. So even though it’s not commutative, I prefer this multiplication operator for our dice EDSL. (I believe that rollN is associative, but I don’t have a proof for this.) So (*) = rollN in my library, as being the most useful of two potentially-confusing options. The documentation tries to make clear how it works.

Summary

The library/EDSL is up on Hackage now for you to experiment with. I mainly intend it to be played with using ghci or small Haskell files for experimenting with dice combinations and calculating odds, but there’s nothing stopping it being used for concisely specified actual dice in some RPG-related program. The library uses some functionality from the the probability library developed by Martin Erwig, Steve Kollmansberger and maintaing by Henning Thielemann. The title of this post no doubt invites disagreement, but I couldn’t resist the rhyme.